The idea of CDC is to capture the changes undergone by

the data in a table in order to make use of those changes elsewhere. Very often

this is to update a data warehouse with just the changes rather than completely

refreshing it. This post is designed to lead you through a simple example

so that you get a good feel of how to create and use CDC.

A word of warning before we start off - this is going to be a long one!

Create Your Environment

For this example we start by creating a new database and

a new table.

1.

Create the database

create database NWM

2.

Make sure that the SQL Server Agent is running

for the database instance

3.

Enable CDC for that new database

exec sys.sp_cdc_enable_db

4.

Check results

select name, is_cdc_enabled from sys.databases

5.

You will get a list of databases and an is_cdc_enabled

flag. In the example

shown the NWM database is enabled.

6.

Next, create

a table to set up for CDC. The table must have a primary key. For convenience

here we make it an identity field.

( id int identity(1,1),

field_1 varchar(32),

field_2 varchar(32),

constraint [PK_nwm_cdc] primary key clustered ([id] asc) on [PRIMARY]

)

7.

Add some data to the table:

insert into nwm_cdc

select 'one', 'ein'

8.

Check the list of CDC-enabled tables in the

database. You will find that you are returned an empty dataset.

select * from cdc.change_tables

Set up CDC for your table

In this section you prepare the data table where changes will cause CDC activity.

1.

Set up CDC for the table by running this system

procedure:

exec sys.sp_cdc_enable_table

@source_schema = N'dbo', --schema of table with data@source_name = N'nwm_cdc', --name of table with data

@role_name = null, --role of table owner (optional)

@captured_column_list = N'id, Field_2',--Fields to capture when changed

@capture_instance = null, --Name of the CDC “capture instance”

@filegroup_name = N'PRIMARY', --filegroup to keep output in

@supports_net_changes = 1 --1 = merge changes on the same field

2.

Re-run step

8 above. You will now get what you see below. Notice that many of the values

are taken from the command in step 1 just above.

the sys.databases view.

b. object_id is the id of the

capture_instance that we have just createdc. schema_id is the id of the schema of the table we referenced in step 8, as found in

the sys.schemas view.

d. capture_instance is the name of the CDC setup that we have just created.

There can be two per data table, and each must have a unique

name – the default is the table’s own name with _cdc added.

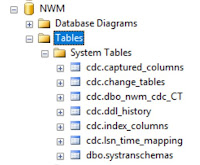

3. Now look at the System Tables branch under your database

in the SSMS Object Explorer:

cdc. The one that you have just made by performing step 1

just above is cdc.dbo_nwm_cdc_CT, and this will collect the

data generated by the CDC system whenever the table is

changed.

4. Now go further down in the Object Explorer and open up the SQL Server Agent tree.

It should look like this (right). Two new jobs have been created in order to hold the code necessary

for processing the CDC operations.

Note If you wish

to disable CDC on a table then you use sys.sp_cdc_disable_table, providing it the @source_schema, @source_name, and @capture_instance values to identify the Capture Instance.